AI governance is becoming the central bottleneck for deploying powerful AI systems safely at scale, especially for agentic systems like the ones you’re exploring. Here’s a clear, structured map of the field across ethics → risk → management → regulation → implementation.

1. What “AI Governance” Actually Means

AI governance is how we ensure AI systems are:

- Safe

- Aligned with human values

- Accountable

- Transparent

- Legally compliant

- Societally beneficial

It spans three layers:

| Layer | Question | Example |

| Ethics | What should AI do? | Fairness, privacy, autonomy |

| Risk Management | What could go wrong? | Bias, hallucinations, agent misuse |

| Regulation | What must we legally do? | EU AI Act, NIST AI RMF, ISO standards |

2. Core Ethical Principles in AI

These are globally convergent across the OECD, UNESCO, the EU, NIST, and major labs.

| Principle | Meaning in practice |

| Beneficence | AI should create value, not harm |

| Non-maleficence | Prevent misuse, abuse, and unintended harm |

| Autonomy | Humans remain in control (human-in-the-loop) |

| Justice | Avoid bias and discrimination |

| Explicability | Systems should be explainable/auditable |

| Accountability | Someone is responsible for outcomes |

| Privacy | Data use is controlled and transparent |

These are not philosophy—they now directly map into regulatory requirements.

3. Major Risk Categories in Advanced AI / Agent Systems

For agentic, orchestration-heavy AI, the risk profile differs from that of simple chatbots.

| Risk Type | Example in Agent Systems |

| Autonomy risk | The agent takes unintended actions without oversight |

| Tool misuse | The agent calls APIs, writes code, and accesses data incorrectly |

| Hallucinated authority | The agent invents facts and executes plans based on false info |

| Emergent behavior | Multi-agent interaction produces unexpected outcomes |

| Data leakage | Sensitive data exposed through memory or logs |

| Prompt injection | Agent manipulated through malicious inputs |

| Model drift | System behaviour changes over time, unnoticed |

| Over-reliance | Humans trustthe system too much (automation bias) |

| Regulatory non-compliance | Violating data/AI laws unknowingly |

These risks are why governance is now a central component of agent orchestration.

4. AI Risk Management Frameworks

Four functions:

- Govern – Policies, accountability

- Map – Identify context and risks

- Measure – Test, evaluate, red-team

- Manage – Mitigate and monitor.

When creating AI systems, this is increasingly the standard blueprint.

ISO / IEC Standards (global industry)

- ISO 23894 – AI risk management

- ISO 42001 – AI management system (like ISO 27001 for AI)

- ISO 27001 – Security

- ISO 27701 – Privacy

Similar to security audits, companies will eventually require AI audits

5. Global AI Regulations EU AI Act (most advanced law)

AI systems are classified by risk:

| Level | Example | Requirements |

| Unacceptable | Social scoring | Banned |

| High Risk | Healthcare AI, hiring AI, critical infra | Strict audits, documentation, testing |

| Limited Risk | Chatbots | Disclosure required |

| Minimal | Games | No obligations |

Agentic AI that makes decisions or interacts with systems often falls into high risk.

US Approach

No single law. Uses:

- NIST AI RMF

- FTC enforcement

- Sector laws (health, finance)

- Executive orders on AI safety

India is aligning with: - OECD principles

- Risk-based approach

- Likely adoption of audit + transparency model similar to EU/NIST

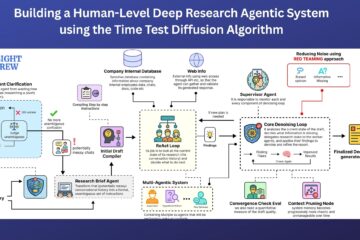

6. Governance Requirements for Agentic/Orchestrated AI Systems

This is where it gets interesting for your research direction.

Modern guidance says agents must have:

A. Human Oversight Layer

- Approval for critical actions

- Kill switch

- Escalation rules

B. Observability & Logging

- Full trace of decisions

- Tool calls logged

- Memory changes tracked

C. Guardrails

- Prompt injection defense

- Tool access control

- Role-based permissions

D. Evaluation & Red Teaming

- Adversarial testing

- Failure mode analysis

- Continuous monitoring

E. Documentation (“Model Cards”, “System Cards”)

- What the system does

- Known limitations

- Risk analysis

7. Ethical vs Legal vs Technical Governance

| Aspect | Ethical | Legal | Technical |

| Bias | Avoid discrimination | Anti-discrimination law | Bias testing datasets |

| Privacy | Respect users | GDPR, DPDP (India) | Data isolation, encryption |

| Safety | Prevent harm | Liability laws | Guardrails, HITL |

| Transparency | Explain behavior | Disclosure requirements | Logs, explainability tools |

Governance is where philosophy meets engineering.

8. Why Governance Is Harder for AI Agents Than LLMs

A static LLM answers questions.

An agent:

- Acts

- Decides

- Uses tools

- Chains reasoning

- Has memory

- Interacts with a real system.

Legal accountability and safety engineering requirements

9. What “Good AI Governance” Looks Like in Practice

Mature AI orgs now implement:

- AI usage policy

- Risk classification of each AI system

- Approval workflow before deployment

- Monitoring dashboards

- Incident response plan for AI failures

- Periodic audits

- Ethics review board

10. The Future: AI Governance as Architecture

We are moving toward:

Governance is built into system design, not added later.

Examples:

- Agents that require approval tokens

- Built-in logging middleware

- Tool permission layers

- Automatic risk scoring of prompts

- Self-monitoring agents

This is a huge research and engineering frontier.

11. Key Takeaway

AI governance is not bureaucracy.

It is the engineering discipline required to safely deploy powerful AI systems.

Especially for:

- Agent orchestration

- Autonomous research systems

- Multi-step reasoning systems.

The system architecture itself incorporates governance.